I recently had a crashed VM running on ESXi4 that hung during reboot on the black VMware boot screen.

After many frustrating hours I realised that it was due to one of its many RDMs. Initially the only way to get the VM to reboot successfully was to remove all the RDM disks. I then added the RDMs back in one by one, rebooting after each, to make sure there were no issues.

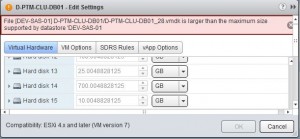

I identified the faulty RDM when I went to add it back in. I received the following error message.

File [datastorename] foldername/filename.vmdk is larger than the maximum size supported by datastore

A few online docs pointed to areas to investigate, but none seemed to be my issue. The datastore was formatted as VMFS3 with an 8 MB block size, which allows files of up to roughly 2 TB. That is more than sufficient to handle a 10 GB RDM.
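To rule out the block-size explanation quickly, the check can be sketched in a few lines of Python. The table holds the commonly documented VMFS3 file-size limits per block size (the true limits are 512 bytes lower, which this rounds away). The function name `fits_on_vmfs3` is just my own illustration, not anything from VMware's tooling.

```python
# Approximate VMFS3 maximum file size (in GB) for each block size (in MB).
# The real limit is 512 bytes less than each value; ignored here for simplicity.
VMFS3_MAX_FILE_GB = {1: 256, 2: 512, 4: 1024, 8: 2048}


def fits_on_vmfs3(block_size_mb: int, disk_size_gb: float) -> bool:
    """Return True if a disk of disk_size_gb is within the VMFS3 file-size limit."""
    if block_size_mb not in VMFS3_MAX_FILE_GB:
        raise ValueError(f"unsupported VMFS3 block size: {block_size_mb} MB")
    return disk_size_gb <= VMFS3_MAX_FILE_GB[block_size_mb]


# My case: 8 MB block size, 10 GB RDM. Block size is clearly not the culprit.
print(fits_on_vmfs3(8, 10))
```

A 300 GB disk on a datastore with a 1 MB block size would fail the same check, which is the situation the error message normally describes.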

I checked the vmware.log file of the VM. There was one error that repeated itself multiple times.

A one-of constraint has been violated (-19)
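If you would rather not scroll through the log by hand, a small script can count how often a message repeats. This is only a rough sketch: the function name `count_matching_lines` is made up for illustration, and the path in the usage comment assumes you have copied vmware.log somewhere you can run Python.

```python
from typing import Iterable


def count_matching_lines(lines: Iterable[str], needle: str) -> int:
    """Count the lines that contain needle, ignoring case."""
    needle = needle.lower()
    return sum(1 for line in lines if needle in line.lower())


# Example usage against a local copy of the VM's log (path is an assumption):
#
# with open("vmware.log", errors="replace") as fh:
#     print(count_matching_lines(fh, "one-of constraint has been violated"))
```

A count well above one confirms the error is recurring rather than a one-off during a single power-on attempt.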

I tried adding the disk in as both a Virtual and a Physical RDM without success. I tried adding it to a different VM, also without success. As a final resort I mounted the volume from the SAN to a physical Windows server. While I was expecting a corrupt volume, I was surprised to find it in a clean state with accessible data.

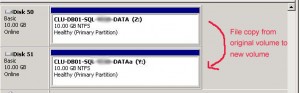

As much as I would have liked to keep troubleshooting the issue, time was a factor. I created a new 10 GB volume, mounted it to the physical server, and then performed a simple file copy from the original problem volume to the newly created one.

I un-presented both volumes from the physical server. Then, back in ESX, I went through the process of adding the newly created volume as an RDM, leaving the original faulty volume out.

In conclusion, it’s time to get the hell away from these RDMs. There’s just little justifiable reason to keep using them nowadays.

Worse yet, as I’m told, I may have resolved the issue but haven’t found the root cause.

Appendix

VMware KB article to RDM error